Why Regulation Could Reshape Not Just How Games Work — But How They Feel

This article offers a practical breakdown of how the European AI Act could reshape games: how they’re built, how they play, and how they feel. The regulation is complex and still evolving, and this is not legal advice. It’s a guide, not the final word, but a timely one. You might see things differently, and that is fine. I’m publishing it so we can all take note, learn, and improve how we use AI in our work. Be gentle! ;-)

INTRODUCTION — THE CLOCK IS TICKING

On August 1st, 2024, the European Union activated the world’s first sweeping legislation to regulate artificial intelligence: the AI Act (AIA). With it, a new legal reality began for every AI-powered product on the European market — including video games.

Most studios didn’t blink. A few added it to their compliance radar. But on February 2nd, 2025, the first enforcement deadline passed, when the bans on prohibited practices took effect. Most other obligations apply from August 2nd, 2026, and by August 2nd, 2027, when the last high-risk rules kick in, every AI system in every EU-marketed game must be compliant. That includes AI that changes difficulty, reacts to player behavior, or personalizes storylines.

If you’re building games that learn, adapt, or react — this law affects you.

Why This Matters Now

The AIA doesn’t target gaming specifically — but its reach is sector-agnostic, and its language is technology-neutral. So if your system uses adaptive algorithms, behavioral profiling, or biometric prediction — it could be classified as high-risk, or even prohibited under EU law.

This isn’t theoretical.

- Epic Games is under scrutiny for its generative AI-controlled NPCs.

- EA’s Dynamic Difficulty Adjustment is a real-world use case for profiling.

- Ubisoft’s AI-assisted writing tools are exactly the kind of systems that fall into “transparency + human oversight” zones.

- And indie studios are already removing emotion-based gameplay loops from prototypes over legal fears.


The Bigger Shift: Regulation of Emotion, Behavior & Influence

The AIA isn’t just about software. It’s about how software interacts with people.

It creates legal thresholds around:

- Manipulation

- Addiction design

- Profiling

- Emotional targeting

- Transparency

These are exactly the tools that modern game AI increasingly relies on.

That’s why understanding this regulation now — not in 2026 — is critical. Whether you’re a designer, publisher, investor, or founder, the coming years will determine whether your game is:

- a compliant, trusted product in the EU, or

- blocked, fined, or labeled “non-conforming AI” with no CE mark.

DISSECTING THE LAW — WHAT THE AI ACT ACTUALLY REGULATES

The European AI Act (AIA) is structured around three core pillars — each with major implications for gaming. While much attention has gone to its ethical principles and headline bans, the real power of the AIA lies in its legal architecture: how it classifies AI systems, enforces accountability, and imposes operational obligations.

The definition of AI itself sits in Article 3 of the Act and is covered in the section on what counts as AI in games.

Here’s what every developer and publisher needs to know.

PILLAR 1: RISK-BASED CLASSIFICATION (ARTICLES 5–6 + ANNEXES)

The AIA divides AI systems into four categories, depending on how much influence they exert on people’s behavior, health, safety, or rights:

1. Prohibited AI

Outright banned (Article 5). Includes:

- Systems that exploit children or vulnerable users

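- Manipulative or deceptive AI techniques that distort behavior and cause significant harm (e.g. addictive gameplay loops, in extreme cases)

- Real-time remote biometric identification in public spaces (aimed at law enforcement, so rarely a game issue)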

A hyper-engaging loot mechanic that uses voice stress or player fatigue to trigger spending could fall under this.

2. High-Risk AI

Permitted, but only if the system:

- Passes a conformity assessment and carries CE marking

- Is documented and registered

- Includes human oversight, transparency, and fallback procedures

Gaming examples that may qualify:

- Dynamic difficulty that adjusts based on player profile

- AI that predicts player churn or frustration

- Monetization AI that nudges behavior based on gameplay patterns

These could fall under Annex III, whose high-risk areas include:

- Education

- Employment

- Biometrics, including emotion recognition

- Access to essential services (which some argue gaming may indirectly relate to via digital wellbeing)

3. Limited Risk

These must meet transparency requirements:

- AI chatbots

- AI that generates art or content when prompted

- Cosmetic personalization features

Players must be informed clearly that they’re engaging with AI.

4. Minimal Risk

No restrictions, no compliance required.

- Classic enemy pathfinding

- Static rule-based NPCs

- Procedural generation without feedback loops

PILLAR 2: ETHICS & TRANSPARENCY OBLIGATIONS

While the first pillar governs classification, the second embeds a code of conduct:

- Human agency and oversight

- Technical robustness and safety

- Data governance

- Transparency

- Fairness and non-discrimination

- Environmental and societal wellbeing

- Accountability

Game systems using generative AI — especially ones that influence outcomes (e.g. quest paths, moral decision trees) — must meet these criteria to avoid future scrutiny or penalties.

PILLAR 3: ENFORCEMENT, CE MARKING, AND LIABILITY (ARTICLES 43–49 AND 99)

This is where the law bites.

- CE marking becomes mandatory for all high-risk systems.

- Systems must undergo conformity assessment (self-assessment or third-party review).

- Providers must maintain technical documentation proving compliance.

- Each member state designates its own enforcement bodies, so enforcement practice (not the rules themselves) can vary by country, with some regulators likely to move faster or act more strictly than others.

Penalties for non-compliance:

- Up to €35 million or 7% of global annual turnover, whichever is higher, for prohibited practices (lower tiers apply to other violations)

If your studio sells to the EU and your AI system adapts to users without the proper disclosures, you’re now within enforcement range.


WHAT COUNTS AS AI IN GAMES?

At first glance, the AI Act seems aimed at facial recognition, chatbots, or autonomous vehicles. But the definition of AI in Article 3 is technology-neutral and intentionally broad — and it captures many systems already baked into modern games.

💬 “AI system” means a machine-based system that, for explicit or implicit objectives, infers from the input it receives how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. (Paraphrased from AIA Article 3(1))

That includes… games.

Systems in Games That Qualify as AI

If you’re wondering whether the AIA applies to your project, here’s a checklist based on current guidance and legal interpretation:

Systems Likely Covered

- Dynamic Difficulty Adjustment (DDA): adjusts gameplay based on player behavior. If adaptive and behavioral → likely high-risk

- Behavioral Prediction Engines: used for retention, churn modeling, or monetization triggers

- Emotion or Biometrics-Based AI: systems that track facial expressions, voice stress, eye movement, or physical input to adjust experience

- Generative AI Tools: auto-writing dialogue, ambient lore, or quest lines, especially if adaptive or responsive

- AI Moderation Tools: systems that assess player toxicity or behavior and take automated action

- Personalized Game Recommendations: if part of the game system itself (not storewide), and tied to profile → under scrutiny

Systems Likely Excluded (Minimal Risk)

- Static AI enemy routines

- Rule-based branching dialogue

- Procedural content with no player-driven learning loop

- UI personalization that doesn’t affect gameplay outcomes

If your AI changes its behavior over time, based on input data or profiling, it likely meets the AIA threshold.

Grey Zones: When Game Logic Becomes “AI”

Here’s where it gets tricky: not all ML or personalization is “AI” under the AIA — but many are treated as such if they infer and adapt to users.

Examples:

- A skill tree that unlocks based on behavior = fine.

- A system that learns what kind of player you are and subtly adjusts loot drops = high-risk.

- An assistant that gives you tips = okay.

- An assistant that gives tips based on predicted frustration or likelihood to churn = profiling → high-risk.
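The grey-zone examples above boil down to a few yes/no questions. As a rough sketch, they could be encoded as a first-pass triage helper. This mirrors the heuristics used in this article, not the legal test in the Act; the `Feature` fields and the returned labels are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    """Hypothetical description of one game feature (field names are illustrative)."""
    name: str
    infers_from_player_data: bool  # learns or predicts, rather than following fixed rules
    profiles_player: bool          # builds a picture of the individual player
    affects_outcomes: bool         # changes loot, difficulty, offers, or tips


def triage(feature: Feature) -> str:
    """Rough first-pass label following the article's grey-zone examples."""
    if not feature.infers_from_player_data:
        # Fixed rules, e.g. a skill tree that unlocks based on behavior.
        return "likely fine"
    if feature.profiles_player and feature.affects_outcomes:
        # Learns who the player is and acts on it, e.g. adjusted loot drops.
        return "treat as high-risk"
    # Inference without clear profiling or impact: escalate to a human.
    return "needs review"


skill_tree = Feature("skill tree", False, False, True)
adaptive_loot = Feature("adaptive loot drops", True, True, True)
churn_tips = Feature("tips based on predicted churn", True, True, True)

for f in (skill_tree, adaptive_loot, churn_tips):
    print(f"{f.name}: {triage(f)}")
```

Anything that comes back as "needs review" is exactly the grey zone: a person, ideally with legal input, should make the call.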

Indie Games Are Not Exempt

Even if you’re:

- Under 5 employees

- Not collecting data

- Not based in the EU

… you are still subject to the AI Act if your game is sold or used in the EU and contains qualifying AI components.

Jurisdiction is determined by where the user is, not where the studio is.

RISK LEVELS IN ACTION — MAPPING GAME AI TO THE AIA FRAMEWORK

The European AI Act doesn’t ban AI. Instead, it categorizes it into four graduated risk levels — and sets obligations accordingly. Every AI system in a game will fall into one of these categories. Knowing where your system lands determines everything: from documentation requirements to CE marking and even legal liability.

Let’s walk through each risk level — with real or plausible examples from the gaming world.

MINIMAL RISK — NO OBLIGATIONS

Definition: AI systems that pose negligible impact on users’ rights or safety. Think rule-based logic, hard-coded behaviors, or systems with no player data processing.

Examples in Games:

- Pre-scripted NPC behavior (non-adaptive)

- Fixed decision trees in quest lines

- Classic game AI (e.g., Pac-Man ghosts)

- Randomized enemy spawn patterns not based on user input

Result: No compliance burden. These are effectively exempt from the AI Act.

LIMITED RISK — TRANSPARENCY REQUIRED

Definition: Systems that interact with users, but don’t make sensitive or influential decisions. They must be clearly labeled as AI.

Examples in Games:

- Chatbot assistants or support bots (e.g., help menus, tutorials)

- AI narration tools (if players know it’s AI)

- Personalized UI layouts or theme adjustments

- Voice modulation overlays used in social games (e.g. disguise or roleplay)

Obligation: Inform the user they are interacting with an AI system — visibly and clearly. That’s it.

HIGH RISK — STRICT CONTROLS AND COMPLIANCE

This is where most modern adaptive game systems will land.

Definition: Systems that:

- Influence player behavior, or

- Use profiling, or

- Affect rights, or

- Operate in regulated domains (education, employment, health, etc.)

Examples:

- Adaptive monetization systems, e.g. adjusting offers based on player spending or frustration

- Behavior prediction for retention, e.g. scheduling content drops based on churn risk

- Emotion recognition to adjust pacing or difficulty

- Educational games with adaptive assessments, e.g. learning games for kids that adapt level based on detected performance

Obligations:

- CE conformity assessment and technical documentation

- Human oversight measures

- Explainability of AI outputs

- Data governance protocols

- Registration in EU AI database

“This isn’t just dev documentation — it’s a regulatory file that courts can access if harm occurs.”

PROHIBITED AI — BANNED COMPLETELY

Definition: Systems deemed to carry unacceptable risk. This includes:

- Subliminal manipulation

- Exploitation of vulnerabilities

- Emotion recognition in workplaces and schools

- Biometric categorisation that infers sensitive traits (e.g. race, beliefs, sexual orientation)

Examples (in games):

- An AI system that monitors player webcam to detect sadness → and then triggers purchases

- Systems targeting players with addiction vulnerability (e.g. minors) to increase playtime

- Adaptive monetization tied to real-time emotional or biometric data

- Generative avatars or NPCs that impersonate real people without consent (at minimum, a deepfake disclosure issue)

Result: Illegal in the EU since February 2nd, 2025, apart from a few narrow exceptions written into Article 5. Use = fines or criminal exposure.

Key Insight: “Most risk comes not from the AI itself, but from what the AI is used to influence — behavior, spending, or emotional state.”
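To make the four levels easier to work with, here is a minimal sketch of a tier-to-obligations lookup. The obligation strings are this article's summaries from the sections above, not wording quoted from the Act, and `RiskTier` and `obligations_for` are illustrative names.

```python
from enum import Enum


class RiskTier(Enum):
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    PROHIBITED = "prohibited"


# Obligations as summarized in this article, not quoted from the Act.
OBLIGATIONS = {
    RiskTier.MINIMAL: [],
    RiskTier.LIMITED: ["tell players they are interacting with an AI system"],
    RiskTier.HIGH: [
        "CE conformity assessment and technical documentation",
        "human oversight measures",
        "explainability of AI outputs",
        "data governance protocols",
        "registration in the EU AI database",
    ],
}


def obligations_for(tier: RiskTier) -> list[str]:
    """Return the checklist for a tier; prohibited systems have no compliance path."""
    if tier is RiskTier.PROHIBITED:
        raise ValueError("prohibited practice: remove or redesign the feature")
    return list(OBLIGATIONS[tier])


for item in obligations_for(RiskTier.HIGH):
    print("-", item)
```

Raising an error for the prohibited tier is a deliberate design choice in this sketch: there is no checklist that makes a banned practice shippable.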

THE THIRD PILLAR — CE MARKING, ENFORCEMENT, AND DEVELOPER LIABILITY

Much has been said about the AI Act’s risk classifications. But few in gaming are aware of the “third pillar” — the enforcement and conformity framework that determines who is liable, how certification works, and what happens if something goes wrong.

Without this pillar, compliance remains a theoretical exercise. With it, the legal weight becomes real.

Let’s break it down.

What Is the “Third Pillar”?

The AI Act is built on three pillars:

- Risk Classification (what kind of AI it is)

- Obligations & Technical Requirements (what you must do)

- Conformity & Liability, the third pillar: how systems are certified, what CE marking requires, who bears legal responsibility, and which EU country enforces the rules

This third pillar translates abstract rules into operational mandates — with real consequences for game developers and publishers.

What Is CE Marking — and Why Games Now Need It?

CE marking isn’t new. It’s used for everything from toasters to toys — to show that a product meets EU safety standards.

Under the AI Act, certain software systems now also require CE marking, including:

- High-risk educational games

- AI monetization or retention systems

- Biometric-based features

- Generative AI-driven mechanics that affect player behavior

This means:

- A technical file must be created

- Independent assessment may be required

- A declaration of conformity must be signed

- CE logo must be affixed if sold in the EU

In games, this creates a regulatory burden similar to releasing a physical device.

What’s in the Technical Documentation?

Every high-risk system must include:

- AI system description

- Purpose and design logic

- Data governance policies

- Risk mitigation methods

- Human oversight measures

- Validation and testing records

Think of it as an open-book exam: if a regulator or harmed user sues, this is your legal defense.
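One lightweight way to keep that file honest is to track it as structured data and check for gaps before every release. The sketch below assumes the six sections listed above; the class and field names are illustrative, and a real technical file will need far more detail than a string per section.

```python
from dataclasses import dataclass, fields


@dataclass
class TechnicalFile:
    """One field per section from the list above (names are illustrative)."""
    system_description: str = ""
    purpose_and_design_logic: str = ""
    data_governance_policies: str = ""
    risk_mitigation_methods: str = ""
    human_oversight_measures: str = ""
    validation_and_testing_records: str = ""


def missing_sections(tf: TechnicalFile) -> list[str]:
    """Names of sections that are still empty or whitespace-only."""
    return [f.name for f in fields(tf) if not getattr(tf, f.name).strip()]


draft = TechnicalFile(
    system_description="Adaptive difficulty module for boss fights",
    purpose_and_design_logic="Keeps win rate in a target band using recent attempts",
)
print(missing_sections(draft))
```

A check like this could run in CI so that a high-risk feature cannot ship with an empty section.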

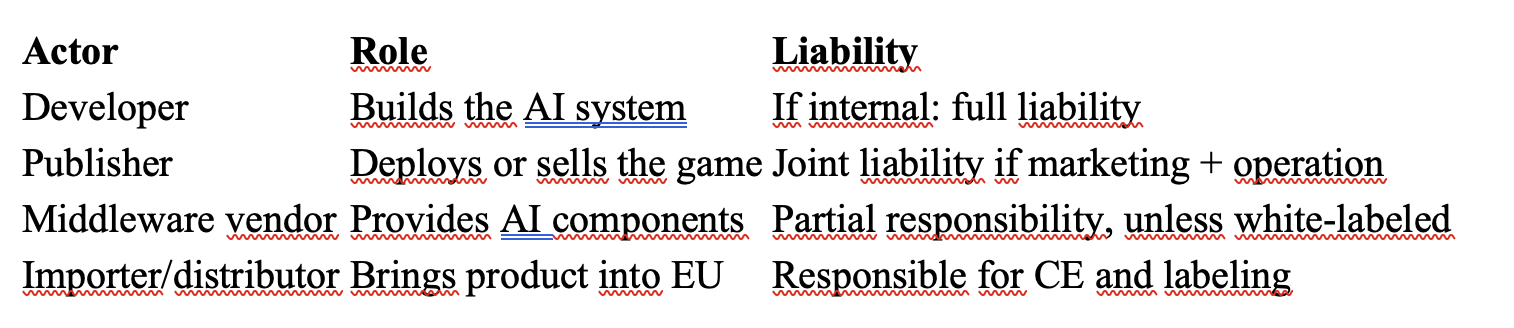

Who Is Liable for Compliance?

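Responsibility under the AI Act is split across the roles it defines: providers (who build a system or put it on the market under their own name), deployers (who use it), importers, and distributors.

If you’re using tools like Unity Muse, Inworld, or Modulate.ai, responsibility may be shared between you and the vendor, so check what compliance documentation they offer.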

Who Enforces the AI Act?

Every EU country designates its own authorities. The bodies most often named include:

- Netherlands: Dutch Authority for Digital Infrastructure

- Germany: Bundesnetzagentur (BNetzA)

- France: CNIL

- Spain: AEPD

- Poland: UODO

Which authority acts depends on where your game is placed on the market or used, not only on your company’s registered address. This means:

- A game developed in Sweden and published in Spain could be liable in both countries

- Developers may be tempted to forum-shop by locating HQs in lighter jurisdictions (e.g. Greece or Romania, which haven’t yet built enforcement teams), but the rules still apply wherever the game is used

What Happens If You Violate the AI Act?

- Fines of up to €35 million or 7% of global turnover (the top tier, for prohibited practices)

- Mandatory product recalls

- Ban on further distribution in EU market

- Criminal liability for deliberate non-compliance (in some member states)

Key Insight: “For game studios, CE compliance may become as critical as PEGI ratings — but with legal, not just content, implications.”

DEVELOPER CASE STUDIES — UBISOFT, EPIC, SONY & THE ROAD TO COMPLIANCE

With the EU AI Act now in force, theory is turning into practice. Behind closed doors, major studios are reworking their pipelines, auditing tools, and, in some cases, scrapping features altogether.

This section examines how key players are adapting. These aren’t just examples — they’re strategic responses that hint at where the entire industry is heading.

Ubisoft: Compliance by Design

Ubisoft has long been an early adopter of AI in design and worldbuilding. But with the AI Act looming, they’ve shifted toward a more cautious, proactive approach.

What They’re Doing:

- Using AI writer assistants only for non-critical quest text and lore

- Requiring human-in-the-loop validation for every generated narrative

- Running internal “AI certification sandboxes” to simulate CE workflows

“We’re prototyping compliance, not just games,” one R&D lead told French regulators in 2024.

Takeaway: Ubisoft is embedding compliance as a design principle, not just a legal requirement.

Epic Games: Pushing Limits, Facing Consequences

Epic embraced generative AI early — especially with Fortnite’s adaptive NPCs like the AI-controlled Darth Vader.

But by 2024, backlash hit:

- Voice actor unions condemned the use of synthetic speech

- Players demanded disclosure after NPCs began reacting to behavioral data

- Regulators flagged Epic’s systems as potentially “high-risk” due to profiling

Under Article 50 (numbered Article 52 in the original proposal), Epic must now:

- Explicitly disclose AI interactions

- Prove transparency in player data use

- Submit high-risk system reports to authorities

One developer insider noted: “We went from innovation to investigation in 6 months.”

Sony: Quiet Compliance with a Player-First Ethos

Sony has taken a more conservative path, investing in long-term research and player agency.

Their AI NPCs:

- React to player decisions across sessions

- Adapt difficulty based on opt-in memory

- Give users control over data retention and AI personalization

Under the AI Act, these design choices check multiple boxes:

- Informed consent

- Human oversight

- Risk minimization

Sony’s approach may be the blueprint for ethical compliance: personal, transparent, and user-controlled.

Smaller Studios: Innovation Meets Caution

Multiple mid-sized and indie developers have quietly:

- Rolled back biometric-based difficulty systems

- Removed “emotion-reading” ads from betas

- Switched from ML to static systems to avoid CE documentation

For startups, compliance is a cost — and a risk — that may delay go-to-market plans.

Key Takeaway: “It’s not just about building smarter AI. It’s about building systems that can be defended in court, explained to parents, and justified to regulators.”

THE GAMER’S VOICE — FROM CONSENT TO CONTROL

While studios scramble for compliance, gamers are asking a more fundamental question: What does “fair” AI look like in games? The conversation has shifted from hype to ethics — and it’s happening fast.

This section unpacks the cultural shift underway: where players go from passive consumers of innovation to active watchdogs of AI ethics.

Gamers Are No Longer Silent on AI

Reddit, Discord, Twitch — the conversation around AI in gaming has exploded.

Trending threads and user comments from Q2 2025 show a notable shift:

- “Was that NPC real or AI?”

- “This difficulty spike feels like manipulation.”

- “Why didn’t they tell us this boss learns your timing?”

These aren’t edge cases. They’re daily debates.

Google Trends (EU, March–May 2025):

- “AI game ethics” → +22%

- “AI difficulty transparency” → +30%

- “Fortnite NPC real or AI” → +70%

Players aren’t rejecting AI. They’re rejecting hidden AI.

Key Concerns from Players

- Informed Consent: “If an AI tracks my behavior, I want to know — and opt out.”

- Data Transparency: “Is my emotional or biometric data being used?”

- Manipulation vs. Engagement: “Is this system helping me… or nudging me toward a purchase?”

As one player posted: “I don’t mind AI. I mind being manipulated without knowing it.”

Case Study: Biometric Personalization Gone Wrong

A VR indie studio tested an AI system that adjusted game pacing based on heart rate and pupil dilation. Early testers loved it — until they found out.

The studio hadn’t disclosed:

- How data was stored

- Whether it was linked to other identifiers

- What would happen post-session

Player reviews tanked. Trust evaporated. The feature was pulled.

Under Article 6 and Article 50 of the AI Act, this would now require explicit consent, human oversight, and likely CE marking.

Transparency = UX

Some forward-looking studios are treating transparency as user experience, not just legal text.

Examples:

- Toggle switches: “Allow AI personalization?”

- HUD pop-ups: “This enemy adapts to your playstyle using AI.”

- Consent flows: Before installing, players choose AI engagement levels

Gamers respond better when they’re treated like stakeholders — not subjects.
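The toggle and HUD examples above can be sketched in a few lines. This is a minimal illustration under my own assumptions: personalization is off until the player opts in, and the notice is always shown while adaptation is active. `AIPreferences`, `effective_difficulty`, and `hud_notice` are hypothetical names, not part of any engine or SDK.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AIPreferences:
    """Player-facing toggle; the name and default are illustrative."""
    allow_personalization: bool = False  # stays off until the player opts in


def effective_difficulty(base: float, adaptive_offset: float, prefs: AIPreferences) -> float:
    """Apply the adaptive offset only when the player has opted in."""
    if prefs.allow_personalization:
        return base + adaptive_offset
    return base


def hud_notice(enemy_adapts: bool, prefs: AIPreferences) -> Optional[str]:
    """Text for the HUD pop-up, or None when no adaptation is active."""
    if enemy_adapts and prefs.allow_personalization:
        return "This enemy adapts to your playstyle using AI."
    return None


opted_out = AIPreferences()
opted_in = AIPreferences(allow_personalization=True)

print(effective_difficulty(1.0, 0.5, opted_out))  # base difficulty only
print(effective_difficulty(1.0, 0.5, opted_in))   # base plus adaptive offset
print(hud_notice(True, opted_in))
```

Keeping the opt-in check inside the gameplay function, rather than only in the menu, means an opted-out player can never receive adapted values by accident.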

Key Takeaway: “The EU AI Act isn’t killing immersion. It’s giving players back control over how that immersion is created.”

THE THIRD PILLAR — ENFORCEMENT, PENALTIES & LEGAL GREY ZONES

The first two pillars of the AI Act — risk classification and obligations — define what must be done. But the third pillar determines what happens if you don’t.

This is the real pressure point for the gaming industry. Enforcement defines not just risk — but exposure.

Who Enforces the AI Act?

The AI Act is mostly not enforced from Brussels. Instead, it delegates power to national supervisory authorities within each EU member state, while the Commission’s AI Office oversees general-purpose AI models.

- Germany: Bundesnetzagentur (BNetzA)

- Netherlands: Dutch Authority for Digital Infrastructure (RDI, formerly Agentschap Telecom)

- France: Commission Nationale de l’Informatique et des Libertés (CNIL)

Each country sets its own internal procedures — but all operate under a unified EU regulatory framework coordinated by the European Artificial Intelligence Board (EAIB).

Think of it like GDPR: EU-wide law, but local regulators.

What Are the Penalties?

Here’s where it gets serious — and underreported in most coverage.

The AI Act enables regulators to issue fines up to:

- €35 million or 7% of global annual turnover (for prohibited AI use)

- €15 million or 3% of global turnover (for non-compliance with most other obligations, including high-risk and transparency requirements)

- €7.5 million or 1% (for supplying incorrect, incomplete, or misleading information to authorities)

The top tier surpasses even the GDPR’s upper limit of 4%.

For context:

- A studio like CD Projekt Red could face fines of €10M+ for high-risk personalization systems without proper CE certification.

- Even smaller studios, if selling in the EU, fall under these tiers, although for SMEs and start-ups the lower of the fixed amount and the turnover percentage applies.
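To see how the "fixed amount or percentage of turnover" rule plays out, here is a small worked sketch. It reflects my reading of Article 99: the higher of the two amounts applies in general, the lower one for SMEs and start-ups, and the third tier uses 1%, which is the figure in the final text. The function and tier names are illustrative, and actual fines are set case by case below these ceilings.

```python
# (fixed cap in EUR, percent of worldwide annual turnover) per tier.
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligations": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def fine_ceiling(tier: str, annual_turnover_eur: int, is_sme: bool = False) -> int:
    """Upper bound of the fine in EUR (integer arithmetic, rounded down)."""
    fixed_cap, percent = FINE_TIERS[tier]
    turnover_cap = annual_turnover_eur * percent // 100
    # Assumption: SMEs and start-ups get the lower of the two amounts,
    # everyone else the higher.
    if is_sme:
        return min(fixed_cap, turnover_cap)
    return max(fixed_cap, turnover_cap)


# A publisher with EUR 1 billion turnover using a prohibited practice:
print(fine_ceiling("prohibited_practice", 1_000_000_000))

# A studio with EUR 100 million turnover breaching a high-risk obligation:
print(fine_ceiling("other_obligations", 100_000_000))
```

For the large publisher the percentage dominates; for the mid-sized studio the fixed amount does, which is why revenue alone does not tell you your exposure.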

Legal Uncertainty: What Counts as “High Risk”?

Here’s the grey zone most developers worry about:

- An AI system that adapts to player frustration might be called engagement.

- But if that system nudges timing of in-game purchases, it could be reframed as behavioral manipulation.

Under Recital 38 and Article 6, this hinges on whether a system:

- Uses profiling or inferred data

- Affects significant player decisions (e.g., spending, time-on-device)

- Has potential for psychological harm or exploitation

If so? Potentially high-risk, and subject to CE marking and the full compliance stack.

What Is CE Marking for Software?

CE marking — familiar in hardware and toys — now applies to high-risk AI software, including:

- AI used in education, employment, and biometric processing

- Game features that adapt to personal data without clear consent

To qualify, developers must:

- Undergo conformity assessment

- Maintain technical documentation

- Include human oversight mechanisms

Example: A VR game using gaze tracking for difficulty modulation might need to pass CE marking, especially if targeting minors or health outcomes.

Exploiting National Divergence?

Some have speculated that companies could “forum shop” — registering in a more lenient country like Greece or Bulgaria.

However:

- The AI Act applies where the system is used, not just developed.

- So if your game is sold or used in Germany, German enforcement rules apply — even if you’re headquartered elsewhere.

Some countries have reportedly been slow to embrace all AI Act provisions (as per internal government sources and lobbying leaks), but as an EU regulation it applies directly in every member state, so the margin for gaming the system is extremely narrow.

A financial structure workaround doesn’t equal a compliance loophole.

Legal Takeaway: “The AI Act flips the burden. It’s no longer ‘prove harm.’ It’s ‘prove safety.’” — EU Parliament Rapporteur on the Act, 2024

COMPLIANCE ISN’T THE END — IT’S A STRATEGIC ADVANTAGE

The European AI Act is often viewed as a restriction. But for forward-looking studios, it’s becoming a market differentiator.

Instead of asking “How do we avoid penalties?” the smartest developers now ask:

“How do we turn compliance into trust, visibility, and long-term value?”

Let’s break down how.

1. Ethical Design Becomes a Competitive Signal

Today’s players — especially in Europe — are not passive.

They’re asking:

- Is this game nudging me to spend?

- Am I being profiled without knowing it?

- Is this AI fair, or just persuasive?

Studios that disclose AI features clearly, offer opt-outs, and show ethical restraint will:

- Attract more loyal users

- Build media goodwill

- Earn platform preference, especially from consoles and stores under EU pressure

Survey Insight (April 2025, Ipsos EU): 61% of players aged 18–35 said they would prefer a certified game over one that “hides how its AI works.”

2. New Trust Labels Are Coming

The EU may introduce a voluntary compliance label, much like the “Energy A+” label or organic food seals.

- Visual AI labels could appear in store listings

- Games with CE-marked features may receive enhanced visibility or parental filters

- Youth-oriented platforms (e.g. Switch, educational apps) may require it in the future

Imagine a PEGI-style badge — but for AI ethics.

3. Public Sector & Institutional Markets

Education and government-funded platforms are watching the AI Act closely. They need compliant, safe, explainable games — especially for:

- EdTech

- Recruitment simulations

- Youth development programs

Studios that align early with the AI Act are already in talks for:

- EU Horizon grants

- Municipal gaming pilots

- Public–private innovation funds

A 2026 study from the European Schoolnet Initiative will assess which games meet AI safety standards. This could be a springboard.

4. Investors Are Now Asking

Post-GDPR, data compliance became a standard due diligence question in VC rounds. The same is now happening with AI compliance.

Investors want:

- Documentation of ethical risk assessments

- A clear stance on player data and behavioral AI

- CE-readiness if applicable

“A startup with AI features but no AIA plan is now a liability — not a moonshot.” — Partner, Balderton Capital (April 2025)

5. Middleware & Tooling Will Adapt — Use It

Unity, Inworld.ai, Modulate, and others are already adapting their APIs and SDKs to:

- Provide pre-certified modules

- Include consent layers

- Auto-generate risk classification guides

Smart developers will build on top of compliant foundations, not retrofit their stack in 2026.

Strategic Summary:

Compliance ≠ cost center. It’s a signal — to players, regulators, funders, and platforms — that you’re ready for what’s next.

WHAT GAME DEVELOPERS SHOULD DO NOW — A TACTICAL CHECKLIST

The European AI Act is no longer theoretical. The countdown to enforcement has started. Studios that wait until 2026 to act will be racing against regulators, not competitors.

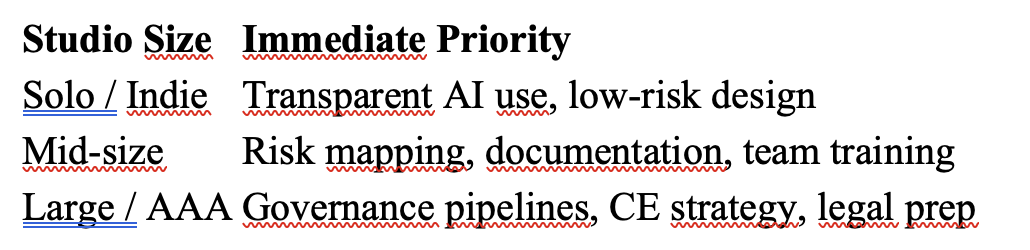

This section breaks it all down into practical steps, grouped by studio size and AI maturity.

For All Game Studios — Your Core Action Plan

No matter your size, if you’re using AI in any form, do this now:

1. Audit Your AI Systems

- Map every feature in your game that involves AI — from enemy logic to personalization to anti-cheat.

- Identify which features learn or adapt based on player behavior.

- Flag those that might influence spending, emotions, or long-term engagement.

2. Classify the Risk

- Use the EU’s risk pyramid (Minimal, Limited, High, Prohibited) as a framework.

- Focus especially on: dynamic difficulty, player profiling, emotion detection, and monetization-linked AI.

- If unsure, assume high-risk and document reasoning.

3. Add Transparency Layers

- Clearly disclose AI use in your game interface or onboarding.

- Provide a “learn more” option for curious or concerned players.

- Where needed, offer opt-outs or manual overrides.

4. Document Everything

- Create a “Technical File” with: AI system purpose and design, training data sources, risk assessments, and human oversight methods.

- This will be required for CE compliance and legal defense.

5. Train Your Team

- Developers, designers, and writers must all understand: what counts as AI under the Act, how their choices impact risk classification, and how to design with transparency and opt-in mechanics.
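Steps 1, 2, and 4 of the plan above can start as something as simple as a feature inventory in code. The sketch below is one possible shape, assuming a person proposes each tier and that an unclassified feature defaults to high-risk with the reasoning written down. `AuditEntry`, `finalize`, and `flagged` are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AuditEntry:
    """One row of a hypothetical AI feature inventory."""
    feature: str
    adapts_to_player: bool
    may_influence: tuple[str, ...] = ()  # e.g. ("spending", "emotions")
    proposed_tier: Optional[str] = None  # None means "not sure yet"
    reasoning: str = ""


def finalize(entry: AuditEntry) -> AuditEntry:
    """Apply the 'if unsure, assume high-risk and document reasoning' rule."""
    if entry.proposed_tier is None:
        entry.proposed_tier = "high"
        entry.reasoning = entry.reasoning or "Unclassified at audit time; assumed high-risk."
    if not entry.reasoning:
        raise ValueError(f"{entry.feature}: classification needs written reasoning")
    return entry


def flagged(entries: list[AuditEntry]) -> list[str]:
    """Features that adapt to players and may influence spending, emotions, or engagement."""
    return [e.feature for e in entries if e.adapts_to_player and e.may_influence]


inventory = [
    AuditEntry("enemy pathfinding", False, proposed_tier="minimal", reasoning="Rule-based, no player data."),
    AuditEntry("offer timing", True, ("spending",)),
]

print(flagged(inventory))
for entry in inventory:
    done = finalize(entry)
    print(done.feature, "->", done.proposed_tier)
```

Refusing to accept a tier without reasoning is the point of the exercise: the written justification is what ends up in the technical file.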

For Medium to Large Studios — Build an AI Governance Pipeline

If you’re shipping globally or working on complex AI systems:

1. Appoint a Compliance Lead

- Someone must own the AIA process — think of this as your “AI DPO.”

2. Set Up Internal Audits

- Before launch, test AI systems for manipulation, profiling, or risk of addiction.

- Simulate edge cases: what happens if a child plays? Or a user with a disability?

3. Liaise With Legal & Certification Bodies

- Engage early with Notified Bodies authorized to issue CE certificates for AI systems.

- Track updates to harmonized standards — the AIA rulebook will evolve.

4. Consider Ethical Design as Brand Strategy

- Launch campaigns around safe, ethical, player-first AI.

- Add trust labels, explainability features, and parental control documentation.

For Startups & Indies — What You Can Do Now

Even small teams must prepare. Here’s a leaner version of the plan:

1. Focus on Disclosure First

- Use tooltips, menu banners, or Discord announcements to inform players when they’re interacting with AI.

2. Avoid High-Risk Use Cases

- Unless essential, don’t build features that adapt to user psychology or profile behavior.

- If you do, make sure there’s human override.

3. Pick Smart Tools

- Choose AI services (Unity Muse, Inworld.ai, etc.) that offer AIA documentation or risk-limiting design by default.

4. Join Developer Alliances

- Participate in forums (e.g. EGDF, AI4Games, GameDev.World) to stay updated and collaborate on shared certification frameworks.

Don’t Wait for Enforcement. Lead With Readiness.

AIA-readiness is no longer a luxury. It’s a requirement for anyone serious about operating in the EU — and a sign of leadership to the rest of the world.

The Industry’s New Social Contract

The European AI Act isn’t about freezing innovation. It’s about reshaping the social contract between game makers and game players.

You can still use AI to amaze, immerse, and entertain.

But if it manipulates, profiles, or overrides choice — without consent — it now carries legal, ethical, and reputational risk.

Game AI used to be about smarter code. Now, it’s about smarter responsibility.